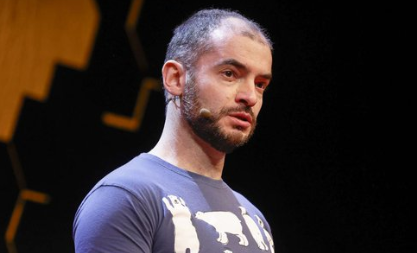

The man is considered top of the line when it comes to AI. What he is warning us all about should make everyone stop and think.

Ilya Sutskever, a former chief scientist and co-founder of OpenAI, has launched a new venture focused on AI safety. Sutskever left OpenAI earlier this year after a tumultuous period, which included a contentious attempt to remove CEO Sam Altman. Reports indicated that Sutskever, along with others, was concerned that OpenAI was prioritizing the commercial potential of its technology over the safety concerns it was initially established to address.

Sutskever’s new organization, Safe Superintelligence, aims to ensure that the development of AI technologies is aligned with safety principles. The firm’s mission is encapsulated in its name and product roadmap, which emphasize the creation of secure and reliable AI systems. The launch statement on Safe Superintelligence’s website says the company will address safety and capabilities together, treating both as technical problems to be solved. The goal is to advance AI capabilities rapidly while ensuring that safety measures remain a step ahead.

Former OpenAI Chief Scientist Ilya Sutskever announced he is forming a new company called Safe Superintelligence, Inc. (SSI) with the goal of building “superintelligence,” a form of artificial intelligence that surpasses human intelligence, in the extreme. https://t.co/WwQ3cmlmgn

— Gvnzng – personification of chaos – Time Traveler (@gvnzng) June 20, 2024

Critics have expressed concerns that major tech and AI companies are overly focused on commercial gains at the expense of safety. This sentiment has been echoed by several former OpenAI staff members who left the company, citing these very issues. Elon Musk, another co-founder of OpenAI, has also criticized the company for straying from its original mission of developing open-source AI to concentrate on commercial interests.

Addressing these concerns, Safe Superintelligence’s launch statement highlights its commitment to safety and progress without the distractions of management overhead or product cycles. The business model is designed to insulate safety, security, and technological advancement from short-term commercial pressures, ensuring a singular focus on developing safe AI.

ChatGPT maker OpenAI’s co-founder and former chief scientist Ilya Sutskever said on Wednesday that he was starting a new artificial intelligence company which is focused on creating a safe #AI environment. https://t.co/eoN5d5ybhi

— China Daily Hong Kong (@CDHKedition) June 20, 2024

Sutskever’s departure from OpenAI came after his involvement in the high-profile attempt to oust Sam Altman as CEO. Following Altman’s quick reinstatement, Sutskever was removed from the company’s board and eventually left in May. Joining him at Safe Superintelligence are former OpenAI researcher Daniel Levy and former Apple AI lead Daniel Gross. Both are co-founders of the new firm, which has offices in California and Tel Aviv, Israel.

The trio positions Safe Superintelligence as the world’s first dedicated lab for developing safe superintelligence (SSI). The company’s singular goal is to address what its founders describe as the most important technical problem of our time: creating a safe superintelligence. By focusing exclusively on this objective, Safe Superintelligence aims to pioneer advancements in AI that are not only powerful but also secure and trustworthy.

Key Points:

i. Ilya Sutskever, former chief scientist and co-founder of OpenAI, has launched a new organization focused on AI safety called Safe Superintelligence.

ii. Safe Superintelligence aims to address AI safety issues and ensure that safety measures are always ahead of technological advancements.

iii. The new organization emphasizes solving safety and capabilities as technical problems simultaneously and insulates its operations from short-term commercial pressures.

iv. Sutskever left OpenAI amid reported concerns that the company was prioritizing commercial gains over safety, a sentiment echoed by former colleagues and co-founder Elon Musk.

v. Joining Sutskever at Safe Superintelligence are former OpenAI researcher Daniel Levy and former Apple AI lead Daniel Gross, with offices in California and Tel Aviv.

James Kravitz – Reprinted with permission of Whatfinger News